Some colleagues asked me why I’m not retired yet. Good question, and

one I ask sometimes myself! One easy answer is available from

FireCalc. I have a 30% chance of a

successful retirement from here, and a >99% chance in 2030, so here we

go. But that’s silly; it’s not like I’m actually going to stop

working in 2030. I could no more stop working than I could stop

breathing. Why not?

In service to Order

There are people who go through the world experiencing it as it is.

That’s most of us! And nearly all of us have days where we slip and

make things a little worse: we cut someone off driving, or we drop a

piece of litter and leave it there. I figure every human does some of

that, sometimes. But then if you look carefully, you’ll see some

people who reliably add a little bit of order back into the world:

they take a menu from the stack and straighten it; they open the gate

to the park and be sure it’s closed and latched child-proof behind

them; they clean as they go in the kitchen; they pick up litter as

they hike. Listening to a few of these—and they’re over-represented

among my friends—I mostly hear them brush it off. “It’s nothing,”

they say. Or, “It’s what anyone would do.” Empirical evidence says

anyone does not do this. “Okay,” they fall back to, “I have to.”

“I have to.”

I have to too. And for me, it’s a prayer. Every little element of

order I add to the world is one more brick in the wall for the fight

against the last enemy. Every

opportunity missed is a waste of the sunlight that grew the plants

that fed me today.

The Sons of Martha

There’s a name for people who feel like this. Kipling understood, and

told us so in The Sons of Martha.

Canada understands—they had Kipling adapt his poem to the Obligation

of an Engineer (but go read the poem first):

In the presence of these my betters and my equals in my calling, I

bind myself upon my honour and cold iron, that, to the best of my

knowledge and power, I will not henceforward suffer or pass, or be

privy to the passing of, bad workmanship or faulty material in aught

that concerns my works before mankind as an engineer, or in my

dealings with my own soul before my Maker.

My time I will not refuse; my thought I will not grudge; my care I

will not deny towards the honour, use, stability and perfection of

any works to which I may be called to set my hand.

My fair wages for that work I will openly take. My reputation in my

calling I will honourably guard; but, I will in no way go about to

compass or wrest judgment or gratification from any one with whom I

may deal.

And further, I will early and warily strive my uttermost against

professional jealousy or the belittling of my working colleagues, in

any field of their labour.

For my assured failures and derelictions, I ask pardon beforehand,

of my betters and my equals in my calling here assembled; praying,

that in the hour of my temptations, weakness and weariness, the

memory of this my obligation and of the company before whom it was

entered into, may return to me to aid, comfort, and restrain.

What a challenge, to let no bad workmanship pass. What does it ask of

us, if we truly commit to leave a world of proper materials, properly

installed? Constant vigilance, surely. And I find it spills over

into those little prayers of order and of shoring up society. As

Kipling tells us:

Not as a ladder from earth to Heaven, not as a witness to any creed,

But simple service simply given to his own kind in their common need.

Communications

So first let’s pray to Vulcan, ugly god of forge and flame,

And also wise Minerva, now we glorify your name,

May you aid our ship’s designers now and find it in your hearts

To please help the lowest bidders who’ve constructed all her parts!

As we’re lifting off it’s Mercury who’ll help us in our need

Not only as the patron god of health and flight and speed

We hope that he will guard us as we’re starting on our trip

As the god of Thieves and Liars, like the ones who built this ship.

—Steve Savitsky, A Rocket Rider’s Prayer

For all the reasons above, I’m an engineer and I’m an engineer every waking hour. And for reasons I don’t really introspect on, I’m a communications engineer. If the Twelve Olympians descended from their thrones, nearly all of my engineering brethren would follow Athena or Hephaestus. And I love their ideas and their work! But I’d be off with Hermes, messenger and healer and psychopomp. It’s why I’m fascinated by amateur radio as a hobby. It’s why I work on the Internet:

We get to connect people.

It’s why I landed at Meta: WhatsApp, Facebook, and Instagram connect billions-with-a-b of people daily. Billions of expats call home for free using WhatsApp. That’s incredible; that’s a public service on a scale that leaves me nothing for comparison. And it’s the kind of work I expect to continue to do as long as my hands can hold a pen.

In the course of leaving Meta this week, I’ve been answering a bunch

of questions about why—the pay is good, the problems are good,

the Meta Boston people are fantastic. And yet I’m out. Why?

Well, the Gallup answer is certainly part of it: I saw a manager cheat

to win a scavenger hunt contest against his own team, and I started

putting out feelers that night. I was instantly shields down.

What’s that mean? Rands says it better than I can. And, since I

last read and thought about this, there is shields merch.

Rands intends his article for managers, and I’ve benefited hugely

from thinking about things that way. But I want to translate it for

individual contributors: it is helpful to be mindful of your own

attachment to your employer. The abhuman consciousness at the heart

of the corporation would sever your employment without a second

thought—just look what happens with layoffs. Your boss might regret

laying you off, but they wouldn’t hesitate to do so.

To try to find some kind of justice in that employment relationship,

you should do two things:

- Be aware of your market value. This means regularly interviewing,

getting offers. The company pays for Radford surveys; you should

do your own version.

- Maintain your network. The company wouldn’t cry to see you go; it

has no tear ducts. You should similarly be willing to walk away

for an excellent offer. This doesn’t mean you should flit off for

an extra 1% of pay, but it does mean you should know what your

threshold to leave is (mine is about 50% of pay at most gigs) and

be ready to take it. Meanwhile, help other people and ask for help

and introductions from them.

- Know your own “shield” state. If your shields are partially are

fully down, you should know before others do. This lets you

present it properly and well.

The specific colleague who prompted this is clearly shields down and

knows it. Good! But they don’t know their network—despite having a

library of stories of colleagues who left and who probably (based on

symmetry) think well of them—and they have no idea of their market

value. It’s time for them to get some interviews and figure out

what’s possible out there, and they know that. What they didn’t know,

and I’m not sure I communicated, is that they would also benefit from

mindfulness work on exactly why their shields are down. That will

provide a path to fix it in a future engagement.

This is a draft found in 2024 from 2020. I’m glad to have it out

there, but it seems to refer to a “like that” to which I don’t have a referent.

Like a lot of people who end up with a belief like that, I got started

with one—my mother brought me to church and to Sunday school, and my

grandmother put a prayer rock on my bed (you can google for the corny

poem), and my father showed me by his actions that he prayed about his

problems and trusted God to see him through. And I went to

confirmation class and memorized creeds and catechisms. I went off to

college, and believed in theory, in an intellectual sense. I

identified as a Christian, as a Lutheran. Intellectualism and

identity are easy paths for someone like me. But that wouldn’t have

brought me to write what I did today, and I think they skirt the

foothills rather than attacking the central mountain.

What did make the difference? I guess two big forces:

Less important for the truth of this statement, but very important for

how I cope with it, I read some books and I talked to people about

them. “Meeting Jesus Again For the First Time” by Marcus Borg helped

a lot in deconstructing the easy creedal theology of my childhood.

Quite recently, Rowan Williams “The Lion’s World” helped lay out how

the Narnia books can function as a fresh face of God for people raised

in a Protestant context. Some good friends helped too, in

conversation—not all of them Christian, and none with quite the same

understanding as me, but helping make sense together of something

bigger than we can see or directly understand. A bunch of that shapes

the idea that Jesus was from God, and that when he spoke of the

Kingdom of God, he didn’t mean a distant heaven we earn by obedience,

but a way of being in this world available to us now, by our own

inspired choices. Also from that, I understand God in a Lutheran

context, but I have every reason to believe the same love and the same

hope move in the hearts of my Jewish, Pagan, and Baha’i friends, and

many billions of others as well.

More importantly for getting to the truth of it, I hit some hard spots

in my life, and God was there for me. The Footsteps poem is sappy,

but I expect it, or Amazing Grace, were written by people who’d shared

that experience. God’s been there for me on a couple of my worst or

hardest days. Sometimes indirectly—the college chaplain who was

first to call when someone committed suicide outside my dorm window.

Sometimes directly.

Let’s not go into detail here, but I lost some people, I hurt some

people, and I felt very alone. I tried talking to a therapist, and a

support group, and family. I observed in myself many of the stages of

grief, wobbling a lot between bargaining and denial. In that grief, I

called out to God, and I got an answer.

I used to identify with the Puddleglum speech from the Silver Chair.

From memory, “I’m a Narnian, even if there isn’t any Narnia. I’m on

Aslan’s side even if there isn’t any Aslan to lead it.” I don’t feel

like I get to do that any more, no matter how much I like the drama of

the speech. I asked God for help—in among a pile of prayers to help

me forgive or get forgiven or turn back time or otherwise fix the

problem I’d made, I asked for me and some folks who’d hurt each other

to see each other the way God did—seeing all the faults and sins and

mistakes, but loving us nonetheless. It seemed like that would be a

good start to making amends and moving on. I got an answer. I don’t

understand what happened. You could say it was a hallucination in a

time of strong emotion. I wouldn’t, but one could. I saw some things

that don’t make sense to me now and didn’t then. And I saw myself and

the other folks involved as I’d asked for. I saw actions that had

been taken as hurtful as the best that person could do, given who they

were and what they had available. I saw all the possibilities one

could hope for going forward, and all the limits and frailties, and

the worthwhile core.

The experience was stunning. It only lasted a moment, but I can see

it now with what feels like the same clarity. I cannot possibly convey

it in words. A better writer than I has tried in his blog on

Anti-Majestic Cosmic Horseshit,

and I endorse his report of a similar experience. In some ways, it

seems very Jesus-like: It doesn’t give me anything to bargain with or

to change the course of the grief I’m feeling. It gives me one thing

I asked for, but not in a way that’s going to help the plans I had in

mind. As I’ve moved forward, some days the fact of the intervention

has mattered more—someone answered! And some days the content has

mattered more. But on any of those, the sense of seeing myself and

some others with full understanding of our flaws, but with absolute

love, comes through.

I’ve been meaning to learn Prolog for more than ten years. I saw

Jon Millen use it brilliantly back at MITRE, with meaningful

simulations of cryptographic protocols in just a few dozen lines—but

while I could read each line, the insight that allowed their selection

and composition escaped me. I’ve used Datalog for plenty; it’s

great for exactly the problems for which extensibility and federation

make SQL such a pain. Prolog adds all sorts of operational concerns

around space usage and termination. Two recent events nudged me to

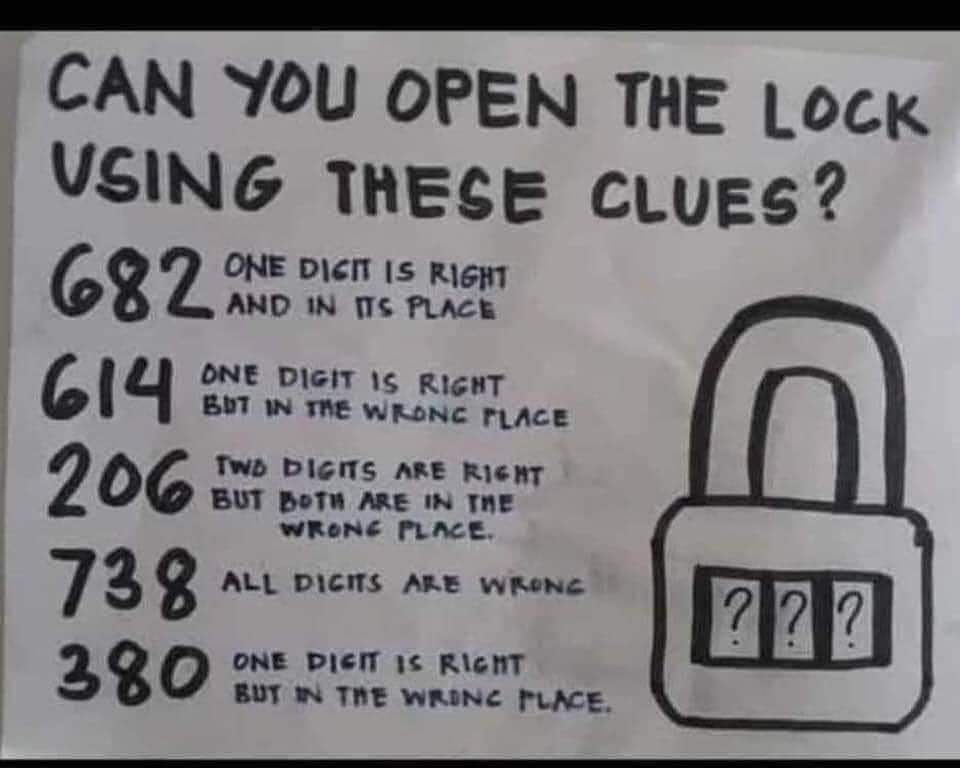

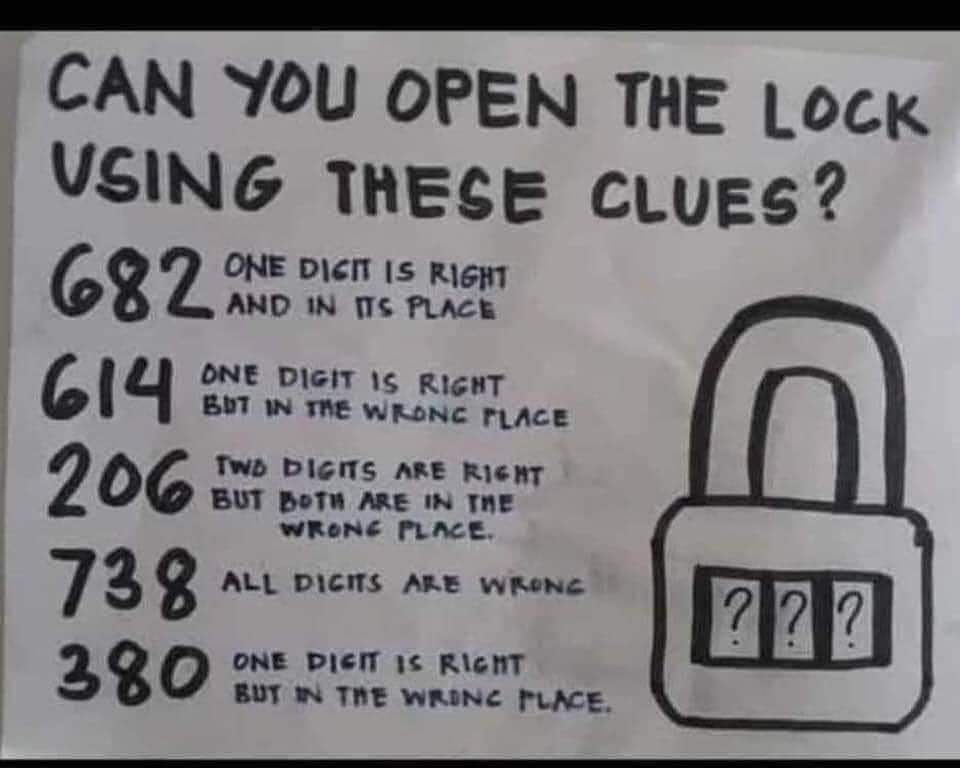

finally learn Prolog: first, a friend posted a

Mastermind-style puzzle. Lots of folks solved it, but the

conversation got into how many of the clues were necessary. I used

the top hit from Googling for “Mastermind Prolog” to play with a

solution, but it felt awkward and stiff—lots of boilerplate, way too

many words compared to a CLP(FD) solver in Clojure or similar. A week

later, Hacker News pointed to a recent ebook on modern Prolog: The

Power of Prolog. I knew a little

about the old way of doing arithmetic and logic problems in Prolog,

with N is X+Y and such; even skimming this book told me that new

ways are much better.

I’ve read the first half of that book, and while I definitely still

don’t understand DCGs yet, I think I can improve on that old

mastermind program.

First, here’s the problem as posed by Jeff:

To start, we can write:

jcb(Answer) :-

% 682; 1 right & in place

mastermind([6,8,2],Answer,1,0),

% 614; 1 right but wrong place

mastermind([6,1,4],Answer,0,1),

% 206; 2 digits right but wrong place

mastermind([2,0,6],Answer,0,2).

% 738; all wrong

mastermind([7,3,8],Answer,0,0),

% 380; one right but wrong place

mastermind([3,8,0],Answer,0,1).

This is a succint and straightforward translation of the problem:

given a guess and some unknown Answer, the mastermind gives us some

number of black and some number of white pegs.

We can write the mastermind program something like this:

mastermind(Guess,Answer,Black,White) :-

layout(Guess),

layout(Answer),

all(Guess,digit),

all(Answer,digit),

count_blacks(Guess, Answer, Black),

count_whites(Guess, Answer, N),

White is N - Black.

layout(X) :- X=[_,_,_].

digit(0).

digit(1).

digit(2).

digit(3).

digit(4).

digit(5).

digit(6).

digit(7).

digit(8).

digit(9).

% check if all elements of a list fulfill certain criteria

all([],_).

all([H|T],Function) :- call(Function,H),all(T,Function).

Now this isn’t so pretty. Having to list out color(red). color(blue). wouldn’t feel so terrible, but having to list out digits

instead of saying digit(X) :- integer(X0, X >= 0, X<=9. seems

ridiculous. Having to write my own all/2 also seems ridiculous.

And the version I got from the Web went on in this style, even to

having lots of cuts—something tells me that’s not right! So let’s get

to rewriting.

First, we can use an excellent library for Constraint Logic

Programming over Finite Domains. And since we know we’ll eventually

want to treat the puzzle constraints as data, let’s make that

conversion now:

?- use_module(library(clpfd)).

% Jeff’s specific problem

jcb_rules([mastermind([6,8,2],1,0),

mastermind([6,1,4],0,1),

mastermind([2,0,6],0,2),

mastermind([7,3,8],0,0),

mastermind([3,8,0],0,1)]).

jcb(Answer,RuleNumbers) :- maplist(jcb_helper(Answer),RuleNumbers).

jcb_helper(Answer,RuleNumber) :- jcb_rules(Rules),

nth0(RuleNumber,Rules,Rule),

call(Rule,Answer).

jcb(Answer) :- jcb(Answer,[0,1,2,3,4]).

We can still address the original problem with jcb(A)., and indeed

that’s a bunch of what I repeated while debugging as I transformed the

program.

The core mastermind program has only a couple changes: Answer

moves to the last argument, for easier use with call and such. The

last line and the digits predicate change to use CLP(FD)

constraints.

% How to play Mastermind

mastermind(Guess,Black,White,Answer) :-

layout(Guess),

layout(Answer),

digits(Guess),

digits(Answer),

count_blacks(Guess, Answer, Black),

count_whites(Guess, Answer, N),

N #= White + Black.

layout([_,_,_]).

digits(X) :- X ins 0..9.

Already I like this better: it’s shorter and it is more useful,

because the program runs in multiple directions!

Now let’s look into how count_blacks and count_whites work. The

first is a manual iteration over a guess and an answer. In Haskell

I’d write this as something like countBlacks = length . filter id . zipWith (==), I suppose—though that would only compute one way.

This can compute the number of black pegs from a guess and an answer,

or the constraints on an answer from a guess & a number of black pegs,

or similarly for constraints on a guess given an answer and black

pegs.

count_blacks([],[],0).

count_blacks([H1|T1], [H2|T2], Cnt2) :- H1 #= H2,

Cnt2 #= Cnt1+1,

count_blacks(T1,T2,Cnt1).

count_blacks([H1|T1], [H2|T2], Cnt) :- H1 #\= H2,

count_blacks(T1,T2,Cnt).

It does the equality and the addition by constraints, which I’d hoped meant

the solver could propagate from the puzzle input (number of pegs) to

constraints on what the pegs are. In practice it seems to backtrack

on those—I haven’t seen an intermediate state offering A = 3 \/ 5 \/ 7.

count_whites has to handle all the reordering and counting. There’s

a library function to do that with constraints,

global_cardinality.

All the stuff with pairs and folds is just to get data in and out of

the list-of-pairs format used by global-cardinality. That function

also requires that the shape of the cardinality list be specifies, so

numlist is there to make it 9 elements long.

count_whites(G,A,N) :- numlist(0,9,Ds),

pairs_keys(Gcard,Ds), pairs_keys(Acard,Ds),

pairs_values(Gcard,Gvals), pairs_values(Acard,Avals),

% https://www.swi-prolog.org/pldoc/doc_for?object=global_cardinality/2

global_cardinality(G,Gcard), global_cardinality(A,Acard),

foldl(mins_,Gvals,Avals,0,N).

mins_(Gval,Aval,V0,V) :- V #= V0 + min(Gval,Aval).

What’s above is enough to solve the problem in the image! But my

friends’ conversation quickly turned to whether some rules were

superfluous. Because library(clpfd) has good reflection facilities,

we can quickly program something to try subsets of rules, showing us

only those that fully solve the problem. This isn’t a constraint

program; it’s ordinary Prolog-style backtracking search. For five

rules, it tries \(2^5=32\) possibilities. It’s slow enough that I

notice a momentary pause while it runs, even with only five rules!

First, it’s weird that there’s no powerset library function. Maybe

I’m missing it?

% What’s the shortest set of constraints that actually solves it?

powerset([], []).

powerset([_|T], P) :- powerset(T,P).

powerset([H|T], [H|P]) :- powerset(T,P).

This uses a weird Prolog predicate, findall, to collect all the

answers that would be found from backtracking the search above, with

all possible rule sets. One of Prolog’s superpowers is that it

handles lots of things in a “timelike” way, by backtracking at an

interactive prompt. When you want to program over those outputs, you

either let the backtracking naturally thread through, or you use

findall to collect them into a list.

no_hanging_goals filters for only those that solve the

problem—“hanging goals” annotate variables that have constraints but

no solution. It’s a bit of a hack with

copy_term,

but it’s documented at the manual page that you can copy from X to

X if you just want to look at the constraint annotations without

really copying the term.

which_rules(Answers) :-

numlist(0,4,Rules),

powerset(Rules,Rule_Set),

findall(Rule_Set-Answer,(jcb(Answer,Rule_Set),

no_hanging_goals(Answer)),

Answers).

% https://www.swi-prolog.org/pldoc/man?predicate=copy_term/3

no_hanging_goals(X) :- copy_term(X,X,[]).

Last, we use findall again to collect all the cases of rules that

work with which_rules, sort by length, and extract the set with the

shortest rules.

shortest_rules(Shortest) :- findall(L-R,(which_rules([R-_]),

length(R,L),

L > 0),

X),

keysort(X,Xsort),

group_pairs_by_key(Xsort,[_-Shortest|_]).

Indeed, it confirms that the first three rules are the shortest set:

bts@Atelier ~/s/mastermind ❯❯❯ swipl revised.pl

Welcome to SWI-Prolog (threaded, 64 bits, version 8.0.3)

SWI-Prolog comes with ABSOLUTELY NO WARRANTY. This is free software.

Please run ?- license. for legal details.

For online help and background, visit http://www.swi-prolog.org

For built-in help, use ?- help(Topic). or ?- apropos(Word).

?- jcb(A).

A = [0, 4, 2] ;

false.

?- shortest_rules(Rs).

Rs = [[0, 1, 2]].

You can try it yourself! Grab the source from

Github,

install SWI Prolog, and let me know what you find.